Jayjun Lee

Welcome! I'm a PhD student in Robotics at the University of Michigan. I'm fortunate to be advised by Professor Nima Fazeli and to be a member of the Manipulation and Machine Intelligence (MMINT) Lab. My research focuses on Robot Learning – developing algorithms for robots to perceive and interact with the physical world. These days I'm interested in multi-modal (i.e. vision, tactile, F/T, language, audio) perception and learning representations to acquire contact- and force-rich manipulation skills.

Jayjun Lee is a second-year MS Robotics student at the University of Michigan – Ann Arbor, working in the Manipulation and Machine Intelligence (MMINT) Lab. Previously, he worked with Prof. Joyce Chai in the Situated Language and Embodied Dialogue (SLED) Lab. He received his Bachelor's degree in Electronic and Information Engineering from Imperial College London, where he was advised by Prof. Ad Spiers in the Manipulation and Touch Lab. He is broadly interested in robot learning, robotic manipulation, and spatial intelligence.

Formal Bio • GitHub • Google Scholar • Twitter

Email: jayjun [at] umich [dot] edu

Sep. 2025

I'm co-organizing Human-to-Robot workshop at CoRL 2025!Aug. 2025

AimBot is accepted to CoRL 2025 in Seoul, Korea!Apr. 2025

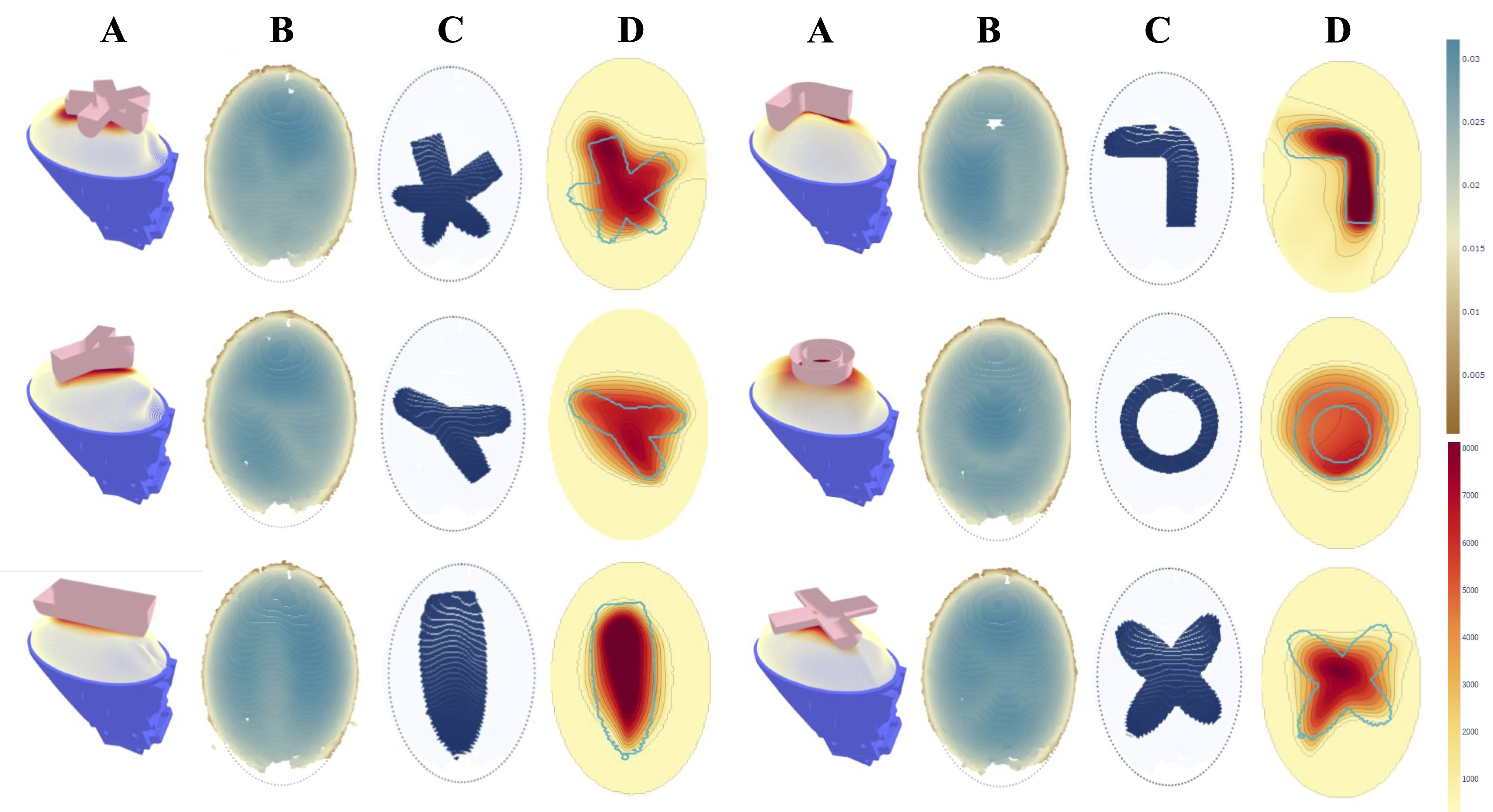

ViTaSCOPE is accepted to RSS 2025 in Los Angeles!Jan. 2025

RACER is accepted to ICRA 2025 in Atlanta!Jan. 2025

COMFORT is accepted to ICLR 2025 in Singapore!Oct. 2024

RACER won the Best Overall Award at UM AI Symposium 2024!Sep. 2024

NISP is accepted to CoRL 2024 in Munich, Germany!

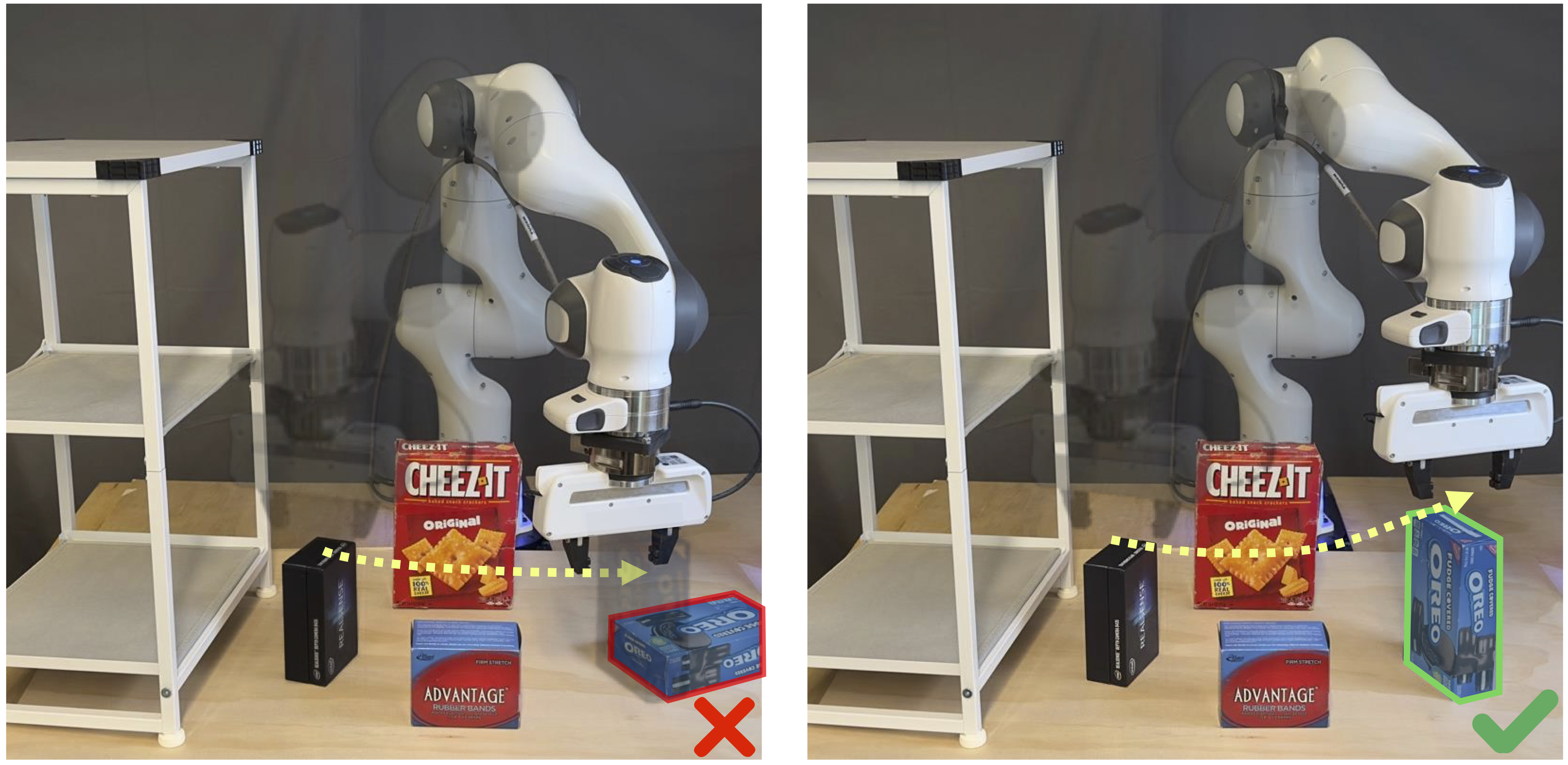

RACER: Rich Language-guided Failure Recovery Policies for Imitation Learning

★ Best Overall Award @ UM AI Symposium 2024 ★

The 3rd Workshop on Language and Robot Learning (LangRob) @ CoRL 2024

International Conference on Robotics and Automation (ICRA) 2025

Webpage • Paper • Code

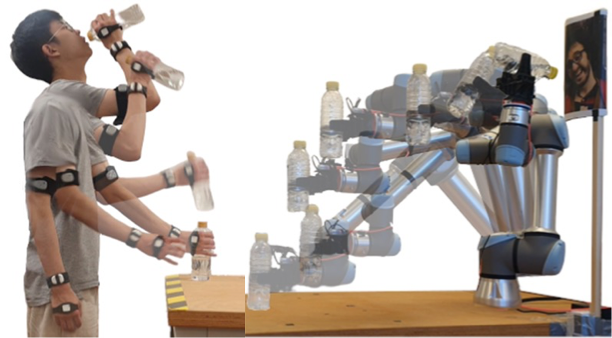

Naturalistic Robot Arm Trajectory Generation via Representation Learning

and Adam Spiers

UK-RAS Towards Autonomous Robotic Systems (TAROS) 2023

PDF